Hi, focusing on this part of your question:

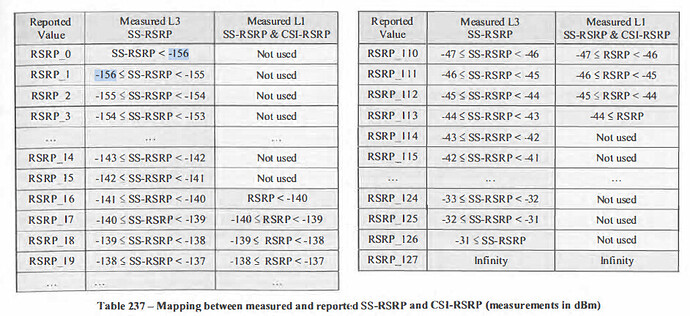

“L1 ss-rsrp range is -140 dBm to -44 dBm, while L3 ss-rsrp range is between -156 dBm and -31 dBm.”

I will share my thoughts, quoting some excerpts from the book “5G New Radio IN BULLETS”, by Chris Johnson:

- The reduction from -140 dBm to -156 dBm was initially introduced in LTE to cater for the coverage performance of enhanced Machine Type Communications (eMTC). The 3GPP specifications for LTE originally defined a minimum RSRP of -140 dBm, but that value was decreased to -156 dBm in the Release 13 version of the specifications.

- Then, for 5G, the minimum Layer 3 value of -156 dBm was aligned with the minimum Layer 3 value specified for LTE.

- So, the L3 Minimum Measured/Reported RSRP value is -156 dBm, but the L1 Minimum Reported value is -140 dBm, which is viewed as sufficient for the purposes of Layer 1 procedures. Here, I’m assuming that the L1 Minimum Measured value is the same as for L3, -156 dBm.

- Given that 7 bits are used when signalling RSRP, 128 entries can be defined: RSRP_0 < -156, RSRP_1 < -155, RSRP_2 < -154 … RSRP_125 < -31, RSRP_126 ≥ -31, RSRP_127: Infinity. This is how the new maximum RSRP value comes out as -31 dBm.

- So, the L3 Maximum Measured/Reported RSRP value is -31 dBm, but the L1 Maximum Reported value is -44 dBm, which is viewed as sufficient for the purposes of Layer 1 procedures. Here, I’m assuming that the L1 Maximum Measured value is the same as for L3, -31 dBm.

- The upper limit of -31 dBm used by 5G is viewed as sufficient when compared to the upper limit of -44 dBm used by LTE, because it allows for a 10 dB margin for UE receiver beamforming relative to LTE.

- For example, if the UE MEASURES a Layer 1 RSRP of -150 dBm, then it is REPORTED AS < -140 dBm, whereas at Layer 3 the same value is MEASURED AND REPORTED AS -150 dBm.
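
To make the points above concrete, here is a minimal Python sketch of the 7-bit L3 mapping and of the L1 reporting limits. It is only an illustration under my own assumptions: the function names `l3_rsrp_index` and `l1_reported_label` are hypothetical, the top bin is taken as RSRP_126 ≥ -31 dBm, and the L1 function only models the -140 dBm to -44 dBm reporting limits mentioned in this answer, not the actual L1 report encoding.

```python
import math

# Limits quoted in this answer (dBm).
L3_MIN_DBM = -156
L3_MAX_DBM = -31
L1_MIN_DBM = -140
L1_MAX_DBM = -44


def l3_rsrp_index(rsrp_dbm: float) -> int:
    """Map a measured RSRP to an L3 index 0..126 in 1 dB steps.

    Index 127 ("Infinity") is never returned by this sketch.
    """
    if rsrp_dbm < L3_MIN_DBM:
        return 0    # RSRP_0: below -156 dBm
    if rsrp_dbm >= L3_MAX_DBM:
        return 126  # RSRP_126: -31 dBm or above (assumed top bin)
    # RSRP_n covers [-157 + n, -156 + n) dBm for n = 1..125.
    return math.floor(rsrp_dbm) - L3_MIN_DBM + 1


def l1_reported_label(rsrp_dbm: float) -> str:
    """Describe how a measured value would appear within the L1 limits."""
    if rsrp_dbm < L1_MIN_DBM:
        return "< -140 dBm"
    if rsrp_dbm >= L1_MAX_DBM:
        return ">= -44 dBm"
    return f"{math.floor(rsrp_dbm)} dBm"


if __name__ == "__main__":
    measured = -150.0
    print("L3 index:", l3_rsrp_index(measured))       # bin [-150, -149) dBm
    print("L1 report:", l1_reported_label(measured))  # below the L1 floor
```

With a measured value of -150 dBm, the L3 side lands in the 1 dB bin starting at -150 dBm, while the L1 side can only say that the value is below -140 dBm, which matches the last example above.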

Please feel free to correct, add info or comment.